NASA RASC-AL Special Edition: Moon to Mars Ice and Prospecting Challenge

National Finalists

Cal Poly and nine other qualifying universities faced off in September 2021 at the Hampton Convention Center in Virginia. A panel of judges reviewed the designs and scored teams on how well they met the design constraints and how effectively they accomplished the competition tasks.

Cal Poly won the award for the most accurate digital core, meaning our system yielded the soil density profile that most closely matched reality.

One Sentence Summary

The NASA RASC-AL Moon to Mars competition challenges student teams to develop a lightweight, durable, and hands-off method for extracting water from Martian/lunar subsurface ice layers while mapping soil density profiles.

My Contributions

On this project, I served as Mechatronics Team Lead as well as Software and Controls Lead. I managed my subteam and was primarily responsible for receiving feedback from our project advisor and updating him on our team's progress, assigning tasks to my team members, acting as a liaison between the two subteams, and coordinating between my team and NASA.

In addition to these responsibilities, I selected and purchased most of the electronic components and handled the budgeting formalities and money management for those purchases. I worked with our Electronics Lead, Tyler Guffey, to set up all electrical components as they came in. I wrote the software that interfaces with these components for all of the project's motion control as well as the entire drilling subsystem. I also worked with Jacob Everest on the software that controls the water extraction and processing subsystem.

Finally, I was responsible for integrating all code components into a finite state machine and ensuring that the critical data inputs worked together correctly. I also personally performed all iterative tests of the system and analyzed and formatted the data. This project was my baby, and my large contributions came from a place of passion for the competition and interest in the technology. Below is the GitHub repository with all source code for the project. Check it out if you're interested!

Overview of Challenge and Our Design Direction

Future interplanetary expeditions depend on the availability of clean water, and this project aims to address that need. Cal Poly's 2021 team, STYX & STONES, used background research from relevant patents and journal articles to brainstorm potentially viable solutions. The team then converged these per-subsystem solutions into a final design direction using a series of decision matrices.
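The write-up does not reproduce the team's decision matrices. As a hedged illustration of the general technique, a weighted decision matrix scores each option against weighted criteria and picks the highest total. The criteria, weights, and scores below are placeholders, not the team's data.

```python
def weighted_totals(weights, scores):
    """Weighted sum of each option's criterion scores.

    weights: {criterion: weight}; scores: {option: {criterion: score}}
    """
    return {option: sum(w * crit[c] for c, w in weights.items())
            for option, crit in scores.items()}


def best_option(weights, scores):
    """Return the option with the highest weighted total."""
    totals = weighted_totals(weights, scores)
    return max(totals, key=totals.get)


# Placeholder matrix: criteria, weights, and 1-5 scores are hypothetical.
weights = {"mass": 0.3, "reliability": 0.5, "cost": 0.2}
scores = {
    "Option A": {"mass": 3, "reliability": 5, "cost": 4},
    "Option B": {"mass": 4, "reliability": 3, "cost": 5},
}
```

Weighting lets a heavily prioritized criterion (here, the made-up "reliability") outweigh several smaller advantages.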

To visualize the requirements, the team created a list of customer needs, a House of Quality diagram, and an engineering specifications table. The STYX & STONES team also discussed the design process it plans to follow, including major project milestones. Specifically, the team plans to excel at autonomously collecting more than five quarts of water while successfully identifying the overburden layers, tasks that previous teams have struggled with.

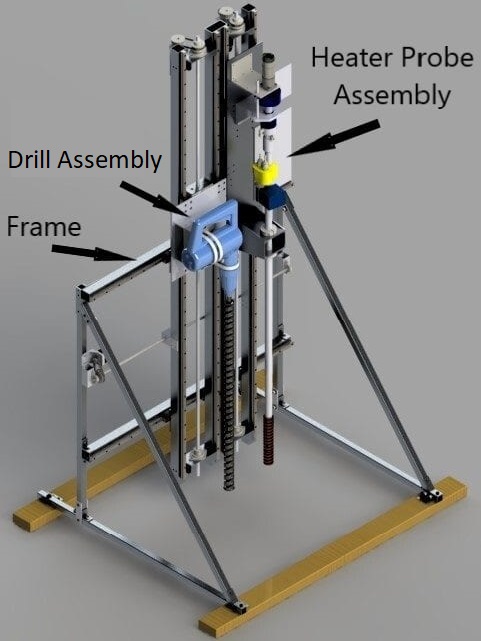

The team’s design direction includes two main components: a masonry drill bit and an auger-heater probe hybrid tool. The masonry drill bit creates a hole in the overburden using the force from a rotary hammer. The heater probe tool then moves to align with the hole and is driven into the loosened overburden by a small gear motor. The heater probe then melts ice using a hot waterjet and delivers the water to a reservoir tank via a peristaltic pump and a two-stage filtration system.
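The extraction sequence above maps naturally onto the finite state machine mentioned under My Contributions. The state and event names below are hypothetical. A real controller would need more states (for example, fault handling), so this is only a sketch of the table-driven pattern.

```python
from enum import Enum, auto


class State(Enum):
    DRILL = auto()          # rotary hammer bores through the overburden
    ALIGN_PROBE = auto()    # heater probe moves over the drilled hole
    INSERT_PROBE = auto()   # gear motor drives the probe into the hole
    MELT_AND_PUMP = auto()  # hot waterjet melts ice; pump moves water to the reservoir
    DONE = auto()


# Hypothetical event names; a real controller would raise these from sensors.
TRANSITIONS = {
    (State.DRILL, "hole_complete"): State.ALIGN_PROBE,
    (State.ALIGN_PROBE, "probe_aligned"): State.INSERT_PROBE,
    (State.INSERT_PROBE, "probe_at_depth"): State.MELT_AND_PUMP,
    (State.MELT_AND_PUMP, "reservoir_full"): State.DONE,
}


def step(state, event):
    """Return the next state; events that don't apply leave the state unchanged."""
    return TRANSITIONS.get((state, event), state)
```

Keeping the transitions in one table makes the allowed sequence easy to audit, and ignoring out-of-order events means a stray sensor signal cannot skip a step.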

To verify the design, the team completed a multitude of analyses and tests for each subsystem and for the prototype as a whole. Drilling tests showed that the rotary hammer and masonry bit can easily cut through all overburden layers while keeping weight on bit (WOB) below 150 N. The load cells attached to the drill carriage were also tested and proved accurate at recording WOB data, both to provide feedback to the drill controller and for later analysis to determine overburden composition. The pumping system was also tested and effectively moved water through all filters and delivered fluid to the waterjet. Further tests verified the heater probe tool, including controlling heater temperature, melting ice, expelling water through the waterjet, and removing loose material from the hole.
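The drill controller used load-cell WOB feedback, and drilling stayed below 150 N. One plausible way to use that feedback is to throttle the carriage feed rate as WOB approaches the limit. The sketch below is an assumption, not the team's controller: the summed load-cell layout, tare offset, feed rates, and 30 N ramp margin are all hypothetical.

```python
WOB_LIMIT_N = 150.0  # weight-on-bit ceiling from the drilling tests


def wob_from_load_cells(readings_n, tare_n=0.0):
    """Total WOB as the sum of the carriage load cells minus a tare offset (assumed layout)."""
    return sum(readings_n) - tare_n


def feed_rate(wob_n, max_rate_mm_s=2.0, limit_n=WOB_LIMIT_N, margin_n=30.0):
    """Downward feed rate that keeps WOB under the limit.

    Full speed below (limit - margin), a linear ramp to zero at the limit,
    and a slow retract (negative rate) at or above the limit.
    """
    if wob_n >= limit_n:
        return -0.5 * max_rate_mm_s  # back the bit off
    ramp_start = limit_n - margin_n
    if wob_n <= ramp_start:
        return max_rate_mm_s
    return max_rate_mm_s * (limit_n - wob_n) / margin_n
```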

Team Dynamics and Project Timeline

Our eight-person team was broken into two four-person subteams: the Mechanical team and the Mechatronics team. Despite the team names, all eight members were Mechanical Engineering students at Cal Poly. As the names suggest, the four students on the Mechatronics team concentrated in Mechatronics, while the four students on the Mechanical team had general concentrations.

During the first quarter of our design project, all eight team members collaborated on a design direction and preliminary subsystem designs. In November 2020, we submitted our Project Plan to NASA for consideration. We qualified for a $5k grant and advanced to the next stage of the competition along with 15 other teams. Our team continued to refine our design and began manufacturing prototypes in the early months of 2021. By March 2021, our team had completed initial tests of the hammer drill subsystem and submitted our Midpoint Review Report and Video.

From March until September 2021, the team continued manufacturing, assembling, and writing code for the project. The first full-system test was completed in June 2021, before the team members split up for summer vacation. The first successful full-system test was completed in September 2021, a few weeks before the competition.

Cal Poly Mechatronics Student SUMO Bot Competition

One Sentence Summary

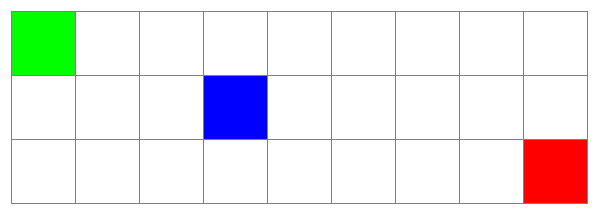

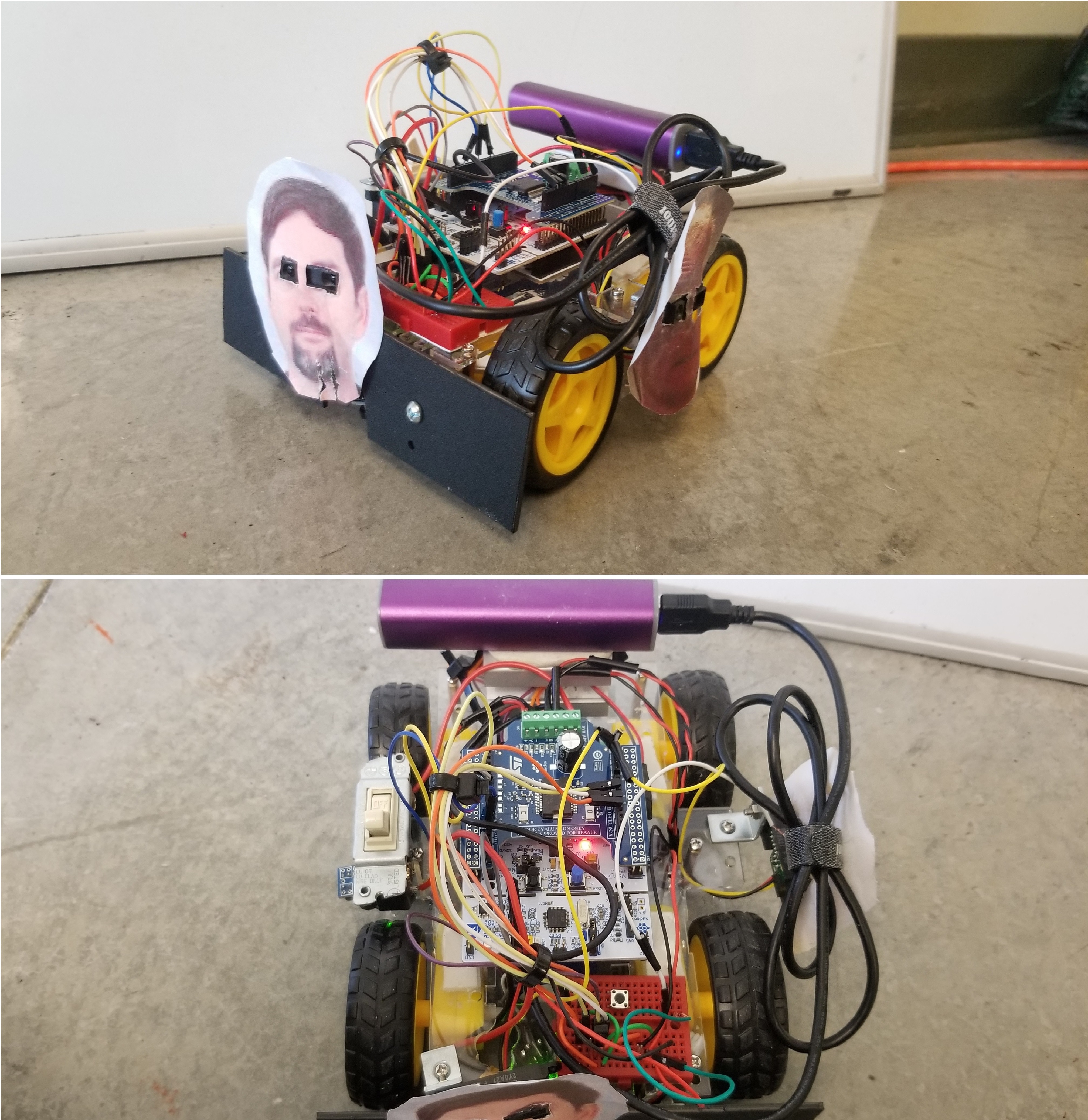

The Cal Poly Mechatronics Student SUMO Bot Competition tasks 2-person teams with building and programming an autonomous “SUMO” bot in MicroPython that fights another SUMO bot in a small arena and attempts to knock it out of the rink.

My Contributions

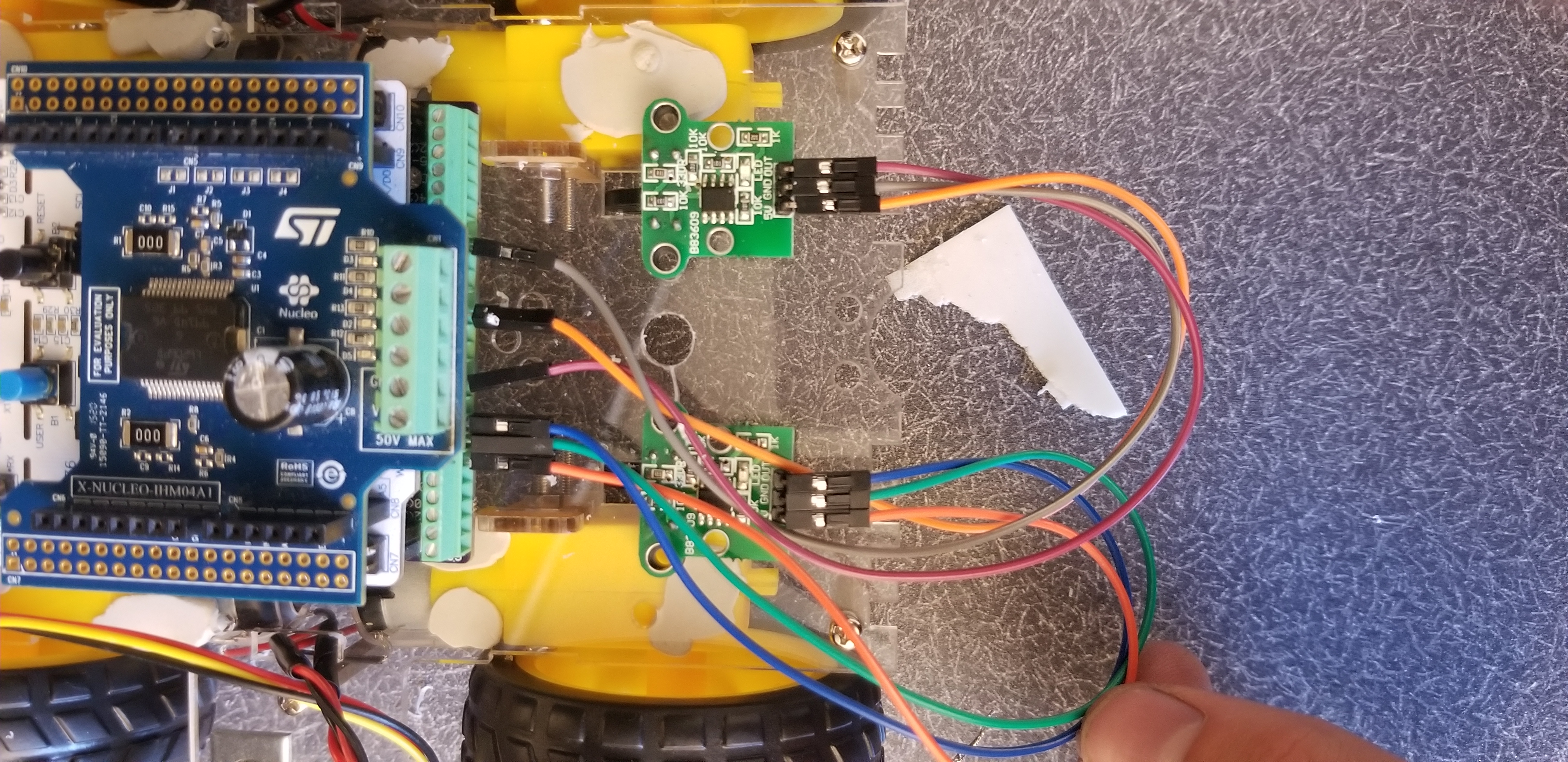

My role on this project was lead programmer. Each team member contributed to the budgeting, hardware assembly, and strategy for the SUMO bot. As the primary programmer, I was responsible for writing the functions and interfacing with the sensors. The functions ranged from controlling the motors attached to the bot’s wheels to reading encoder values, and a proportional controller was used for motor control. I am also proud to have been the only student in my Mechatronics class to complete a brand-new lab module while SUMO bot building was underway. I programmed functionality into an infrared receiver by reading the different packet frequencies sent by a standard IR remote.
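A minimal sketch of the proportional motor controller follows. It assumes that encoder speed in ticks per second is the measurement and that a signed PWM duty cycle in percent is the output. Both of those, and the gain values, are assumptions. It uses only core Python, so it would also run under MicroPython.

```python
class PController:
    """Proportional controller mapping encoder speed error to PWM duty (%)."""

    def __init__(self, kp, setpoint=0.0, duty_limit=100.0):
        self.kp = kp                  # proportional gain (hypothetical tuning)
        self.setpoint = setpoint      # target speed, e.g. encoder ticks per second
        self.duty_limit = duty_limit  # maximum magnitude of the duty cycle

    def update(self, measured):
        """Return duty = Kp * (setpoint - measured), clamped to +/- duty_limit."""
        error = self.setpoint - measured
        duty = self.kp * error
        return max(-self.duty_limit, min(self.duty_limit, duty))
```

A pure P controller settles with some steady-state error under load. For this application that is usually acceptable, because the strategy cared more about responsiveness than exact wheel speed.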

Competition Overview

The Cal Poly Mechatronics Student SUMO Bot Competition challenged 2-person student teams to build and program a small, autonomous robot that fights against other robots in a battle over territory. A small rink was set up and bordered with electrical tape. There were two pieces of tape in the center of the rink to mark the starting positions for each robot. On the “GO” signal, each team remotely started their robot and the competition began! The goal was to remain within the rink and outlast your opponent. There were also restrictions on the robot's footprint and the total power of its motors, and destructive accessories that could damage the other robot or interfere with its sensors were prohibited.

My team’s strategy hinged on the quality of parts we purchased. From the outset, we decided to minimize costs and see where that got us. This was partly to limit our own expenses and partly an exercise in restraint. With hobbyist electronics, it is easy to buy in excess “just in case” or to replace parts as needed. While this is not necessarily a bad thing, my partner and I wanted to challenge ourselves to plan in advance so that extra purchases would be unnecessary. I even scavenged some useful parts from a box someone threw away in my apartment complex. Given this approach, our team recognized that our motors would lack torque compared to those of some other teams. So our strategy was to run constantly around the rink, away from the opponent, and try to bait them toward the edge.

At the start of the match, our bot ran a startup sequence that made a quick turn and headed to the outer ring of the stage. Notably, ours was the only SUMO bot that correctly responded to a remote command. Because no other SUMO bot moved when the startup button on the remote was pressed, that competition requirement was scrapped. Once on the outer ring, our bot would travel in a circle to avoid easy detection. If it sensed an object (a SUMO bot) in front of it, it would turn around and go the other way. If it sensed an object approaching from the side, it would speed up or slow down to throw it off. Think of a matador baiting the bull with a colorful cape. We tied against all competent opponents, but our strategy was good at taking advantage of opponents who were not careful in how they programmed their bots.
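The evasive behaviour above can be read as a small per-tick decision rule. The sketch below is an interpretation, not the bot's actual MicroPython code. The boolean sensor inputs, the priority of the front sensor over the side sensor, and the 1.5x/0.5x speed factors are all assumptions.

```python
import random


def choose_action(front_blocked, side_threat, rng=random):
    """One tick of the 'matador' behaviour.

    Returns (direction, speed_scale): direction is "reverse" or "keep",
    speed_scale multiplies the current cruising speed.
    """
    if front_blocked:
        return ("reverse", 1.0)                   # turn around and circle the other way
    if side_threat:
        return ("keep", rng.choice((1.5, 0.5)))   # surge or brake to throw the attacker off
    return ("keep", 1.0)                          # keep circling the outer ring
```

Choosing the surge-or-brake response at random makes the bot harder to predict for an opponent that steers toward where it expects the target to be.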

We placed in the middle of the standings, coming in at 5th out of 10 teams. Overall, I was happy with the performance of our robot. The total cost to make it was just under $100 and the average cost across all teams came in at about $250. A significant savings!

Artificial Intelligence Project Gallery

Contact me for access to the full GitHub Repository! Kept private at the request of the University.

Summary

This is a collection of Artificial Intelligence projects I have completed in Python. Below is an extensive description of how each project works, the background knowledge needed to understand it, and my reflections on each one. Source code is available to run and test in the GitHub repository.


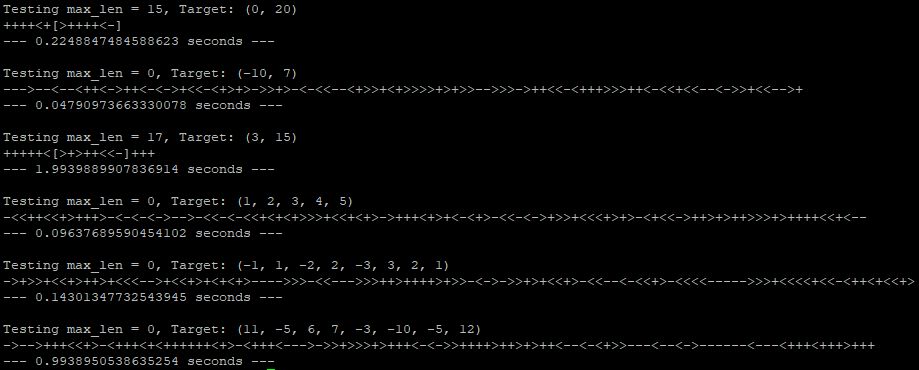

Genetic Algorithm

This program employs a genetic algorithm to write small programs that fill contiguous arrays in memory with target integers.
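The project evolves small programs that write target integers into memory. Reproducing that would require an interpreter for the program representation, which the write-up does not describe. This simplified, hypothetical sketch runs the same genetic-algorithm loop but evolves the array contents directly. The loop consists of fitness scoring, truncation selection, one-point crossover, and per-gene mutation. The population size, mutation rate, and gene range are assumptions.

```python
import random


def fitness(candidate, target):
    """Count the positions that already hold the target integer."""
    return sum(c == t for c, t in zip(candidate, target))


def evolve(target, pop_size=50, mutation_rate=0.1, max_gens=1000,
           gene_range=(0, 9), seed=0):
    """Evolve an integer array toward `target`; returns (best, generations)."""
    rng = random.Random(seed)
    lo, hi = gene_range
    n = len(target)
    pop = [[rng.randint(lo, hi) for _ in range(n)] for _ in range(pop_size)]
    for gen in range(max_gens):
        pop.sort(key=lambda c: fitness(c, target), reverse=True)
        if fitness(pop[0], target) == n:
            return pop[0], gen
        parents = pop[: pop_size // 2]  # truncation selection keeps the fittest half
        children = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            cut = rng.randrange(1, n) if n > 1 else 0  # one-point crossover
            child = [rng.randint(lo, hi) if rng.random() < mutation_rate else g
                     for g in a[:cut] + b[cut:]]       # per-gene mutation
            children.append(child)
        pop = parents + children
    pop.sort(key=lambda c: fitness(c, target), reverse=True)
    return pop[0], max_gens
```

Because the parents survive into the next generation, the best fitness never decreases.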

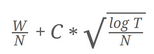

3D Tic-Tac-Toe Computer

This program is a 3D Tic-Tac-Toe computer that utilizes Monte Carlo Tree Search to evaluate the best moves to play against you.
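A full 3D Tic-Tac-Toe engine is too long to show here. Instead, this sketch runs the same four MCTS phases on a much smaller stand-in game. The phases are selection with UCB1, expansion, random rollout, and backpropagation. The stand-in game is single-pile Nim, where each player takes 1 to 3 stones and whoever takes the last stone wins. The game substitution and the exploration constant of sqrt(2) are assumptions, not details of the project.

```python
import math
import random


class Node:
    def __init__(self, stones, player, parent=None, move=None):
        self.stones = stones      # stones left in the pile
        self.player = player      # player to move at this node (0 or 1)
        self.parent = parent
        self.move = move          # move that led to this node
        self.children = []
        self.untried = [m for m in (1, 2, 3) if m <= stones]
        self.visits = 0
        self.wins = 0.0           # wins for the player who made `move`

    def ucb1_child(self, c=math.sqrt(2)):
        """Pick the child maximizing win rate plus an exploration bonus."""
        return max(self.children, key=lambda ch: ch.wins / ch.visits
                   + c * math.sqrt(math.log(self.visits) / ch.visits))


def rollout(stones, player, rng):
    """Random playout; returns the player who takes the last stone."""
    while True:
        stones -= rng.randint(1, min(3, stones))
        if stones == 0:
            return player
        player = 1 - player


def mcts(stones, player, iterations=2000, seed=0):
    """Return the move (1-3 stones) with the most visits after the search."""
    rng = random.Random(seed)
    root = Node(stones, player)
    for _ in range(iterations):
        node = root
        # 1. Selection: descend through fully expanded nodes.
        while not node.untried and node.children:
            node = node.ucb1_child()
        # 2. Expansion: add one untried move.
        if node.untried:
            m = node.untried.pop(rng.randrange(len(node.untried)))
            child = Node(node.stones - m, 1 - node.player, node, m)
            node.children.append(child)
            node = child
        # 3. Simulation: terminal nodes are scored directly.
        if node.stones == 0:
            winner = 1 - node.player  # the previous mover took the last stone
        else:
            winner = rollout(node.stones, node.player, rng)
        # 4. Backpropagation: credit the player who moved into each node.
        while node is not None:
            node.visits += 1
            if winner == 1 - node.player:
                node.wins += 1
            node = node.parent
    return max(root.children, key=lambda ch: ch.visits).move
```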

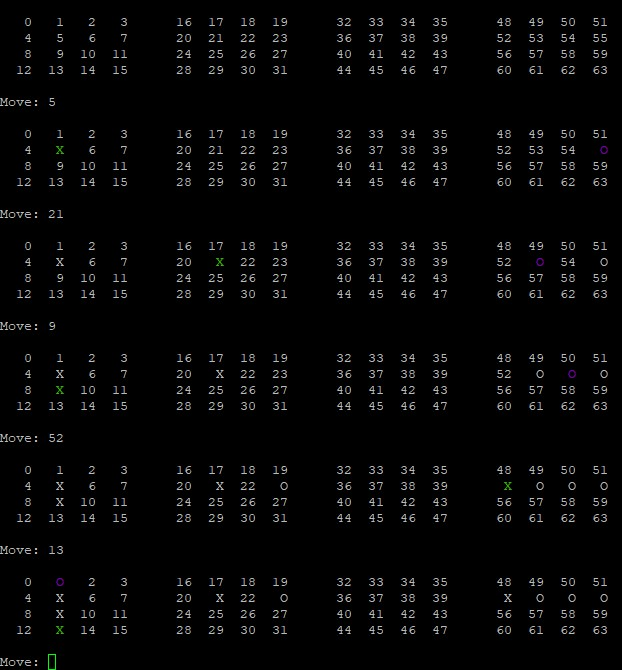

Minesweeper Solver

This program is a cheat for your difficult Minesweeper games. It uses AC-3 for constraint propagation to evaluate a Minesweeper board and find all the mines.
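AC-3 enforces arc consistency on binary constraints by repeatedly removing values from a variable's domain that have no supporting value in a neighbour's domain. Real Minesweeper constraints can involve up to eight cells, so the two-cell constraints here are a simplification. The tiny board used in the test is hypothetical, with 1 meaning mine and 0 meaning safe.

```python
from collections import deque


def revise(domains, constraints, x, y):
    """Drop values of x with no supporting value in y; return True if any were dropped."""
    pred = constraints[(x, y)]
    removed = {vx for vx in domains[x]
               if not any(pred(vx, vy) for vy in domains[y])}
    domains[x] -= removed
    return bool(removed)


def ac3(domains, constraints):
    """Prune `domains` in place.

    domains: {var: set(values)}; constraints: {(x, y): predicate(vx, vy)},
    with every constraint listed in both directions.
    Returns False if some domain becomes empty (no consistent assignment).
    """
    queue = deque(constraints)
    while queue:
        x, y = queue.popleft()
        if revise(domains, constraints, x, y):
            if not domains[x]:
                return False
            # Re-check arcs pointing at x, except the one from y.
            for (z, w) in constraints:
                if w == x and z != y:
                    queue.append((z, w))
    return True
```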

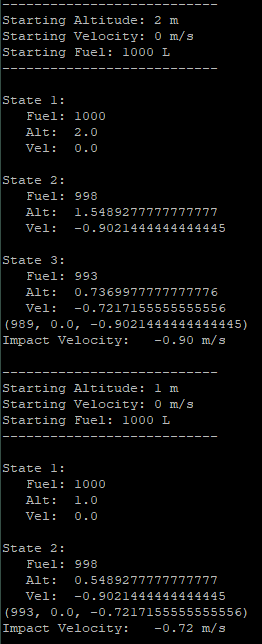

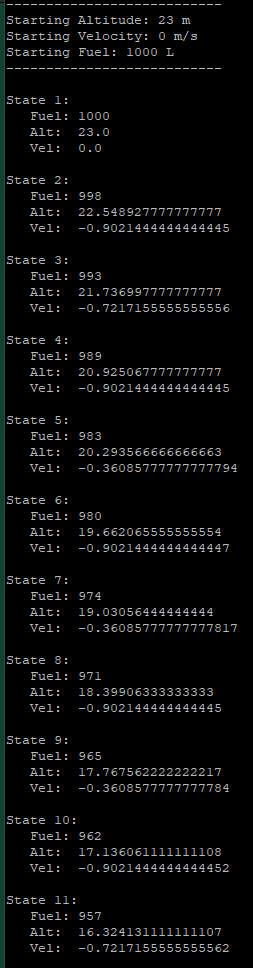

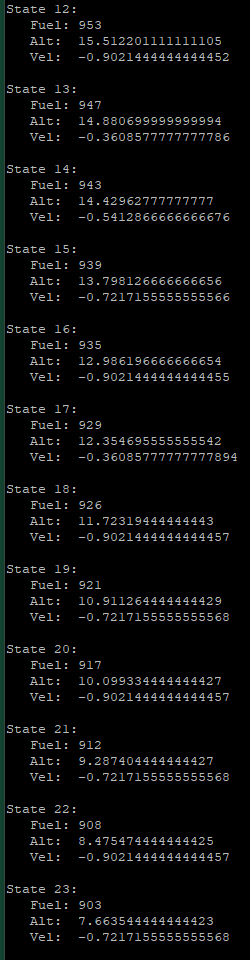

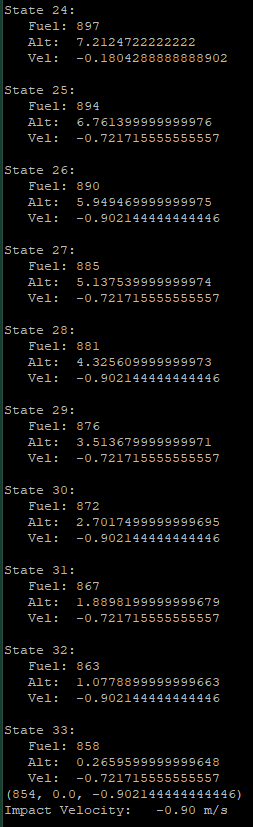

Moon Lander Q-Learning Agent

This program uses Q-Learning to train an agent to land a Lunar Module on a variety of celestial bodies at a safe speed while conserving its finite fuel.
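The core of tabular Q-learning is a single update rule plus an exploration policy. This minimal sketch assumes that the lander's continuous readings are bucketed into discrete states. It also assumes that the actions are labels such as "thrust" and "coast". The bucket sizes and action names are hypothetical, not taken from the project.

```python
import random
from collections import defaultdict


def discretize(altitude_m, velocity_m_s, fuel_kg):
    """Bucket continuous lander readings into a hashable state (bucket sizes are assumptions)."""
    return (int(altitude_m // 10), int(velocity_m_s // 2), int(fuel_kg // 10))


class QAgent:
    def __init__(self, actions, alpha=0.1, gamma=0.99, epsilon=0.1, seed=0):
        self.q = defaultdict(float)  # (state, action) -> estimated return
        self.actions = list(actions)
        self.alpha, self.gamma, self.epsilon = alpha, gamma, epsilon
        self.rng = random.Random(seed)

    def act(self, state):
        """Epsilon-greedy action selection."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.actions)  # explore
        return max(self.actions, key=lambda a: self.q[(state, a)])  # exploit

    def update(self, state, action, reward, next_state, done):
        """Q(s,a) <- Q(s,a) + alpha * (r + gamma * max_a' Q(s',a') - Q(s,a))."""
        best_next = 0.0 if done else max(self.q[(next_state, a)] for a in self.actions)
        target = reward + self.gamma * best_next
        self.q[(state, action)] += self.alpha * (target - self.q[(state, action)])
```

Terminal transitions (a landing or a crash) use no bootstrapped future value, which is why `done` zeroes out `best_next`.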

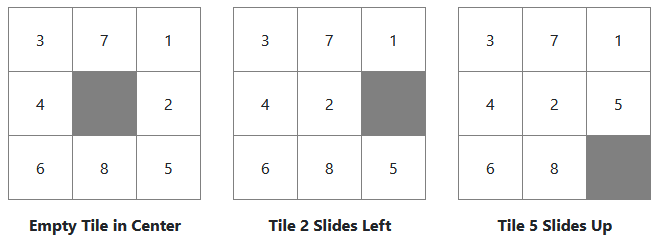

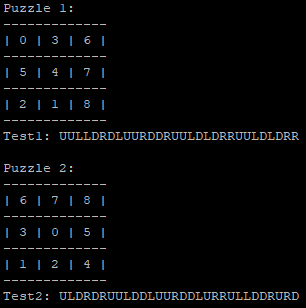

Sliding Tile Puzzle Solver

This program uses A* search along with a couple of search heuristics to solve Sliding Tile Puzzles in the fewest possible moves.
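The write-up doesn't name its heuristics. Manhattan distance is a standard admissible choice for sliding tile puzzles, so this sketch assumes it for the 3x3 (8-puzzle) case, with the blank in the bottom-right corner of the goal. Because the heuristic never overestimates, the path A* returns when it first pops the goal is optimal.

```python
import heapq

GOAL = (1, 2, 3, 4, 5, 6, 7, 8, 0)  # 0 marks the blank


def manhattan(state, width=3):
    """Sum of each tile's row and column distance from its GOAL position."""
    dist = 0
    for idx, tile in enumerate(state):
        if tile:
            goal_idx = tile - 1  # valid for the GOAL layout above
            dist += (abs(idx // width - goal_idx // width)
                     + abs(idx % width - goal_idx % width))
    return dist


def neighbors(state, width=3):
    """Yield states reachable by sliding one tile into the blank."""
    b = state.index(0)
    r, c = divmod(b, width)
    for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        nr, nc = r + dr, c + dc
        if 0 <= nr < width and 0 <= nc < width:
            n = nr * width + nc
            s = list(state)
            s[b], s[n] = s[n], s[b]
            yield tuple(s)


def astar(start, goal=GOAL, h=manhattan):
    """Return the list of states from start to goal, or None if unreachable."""
    frontier = [(h(start), 0, start)]
    g_cost = {start: 0}
    parent = {start: None}
    while frontier:
        _, g, state = heapq.heappop(frontier)
        if state == goal:
            path = []
            while state is not None:
                path.append(state)
                state = parent[state]
            return path[::-1]
        if g > g_cost[state]:
            continue  # stale queue entry; a cheaper route was found later
        for nxt in neighbors(state):
            ng = g + 1
            if ng < g_cost.get(nxt, float("inf")):
                g_cost[nxt] = ng
                parent[nxt] = state
                heapq.heappush(frontier, (ng + h(nxt), ng, nxt))
    return None
```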

Systems Programming Project Gallery

Contact me for access to the full GitHub Repository! Kept private at the request of the University.

Summary

This is a collection of projects related to systems architecture and operating systems. All projects are written in C. Descriptions of each project, along with some demos, are given below. Source code is available to view by request in a private GitHub repository.


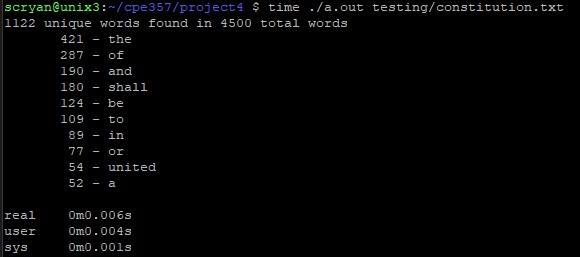

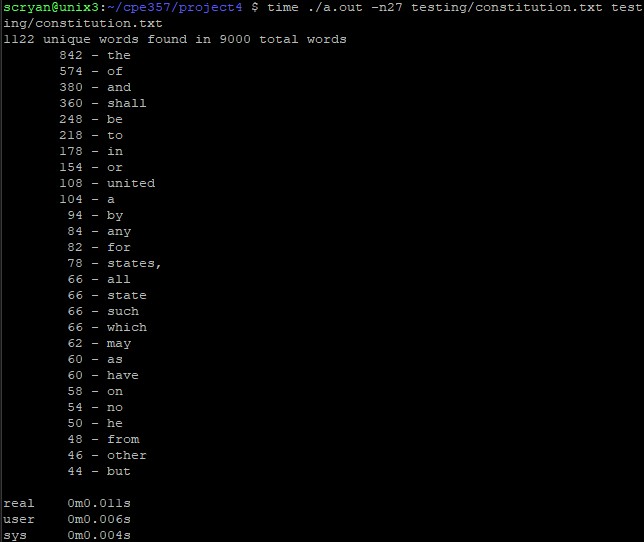

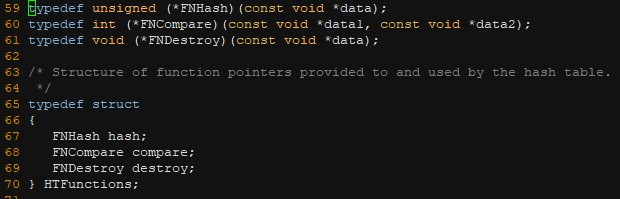

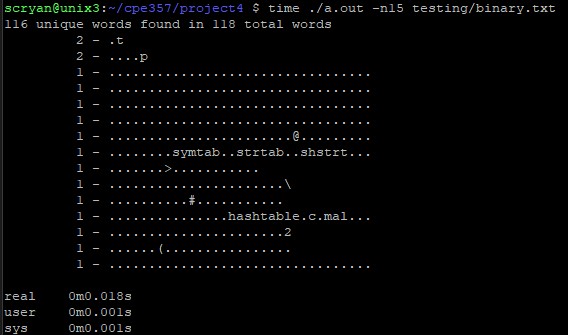

Word Concordance

An OOP-style hash table built from scratch that can store any data type. In this case, it is used to store a word concordance.
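The project is written in C, and the write-up does not say how it resolves collisions. Purely as an illustration, this Python sketch uses separate chaining and doubles the bucket count past a load factor of 0.75. Both of those choices are assumptions. It builds a concordance that maps each word to the line numbers where it appears, and that output format is also an assumption. In everyday Python, the built-in `dict` already does this job.

```python
class HashTable:
    """Separate-chaining hash table that accepts any hashable key and any value."""

    def __init__(self, capacity=8, max_load=0.75):
        self.buckets = [[] for _ in range(capacity)]
        self.size = 0
        self.max_load = max_load

    def _bucket(self, key):
        return self.buckets[hash(key) % len(self.buckets)]

    def get(self, key, default=None):
        for k, v in self._bucket(key):
            if k == key:
                return v
        return default

    def put(self, key, value):
        bucket = self._bucket(key)
        for i, (k, _) in enumerate(bucket):
            if k == key:
                bucket[i] = (key, value)  # overwrite an existing key
                return
        bucket.append((key, value))
        self.size += 1
        if self.size / len(self.buckets) > self.max_load:
            self._resize(2 * len(self.buckets))

    def _resize(self, new_capacity):
        old = [item for b in self.buckets for item in b]
        self.buckets = [[] for _ in range(new_capacity)]
        for k, v in old:
            self._bucket(k).append((k, v))


def concordance(text):
    """Map each lowercase word to the sorted, de-duplicated line numbers it appears on."""
    table = HashTable()
    for line_no, line in enumerate(text.splitlines(), start=1):
        for word in line.lower().split():
            lines = table.get(word)
            if lines is None:
                lines = []
                table.put(word, lines)
            if not lines or lines[-1] != line_no:
                lines.append(line_no)
    return table
```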

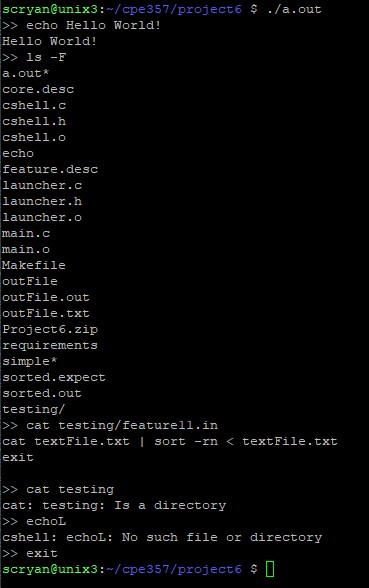

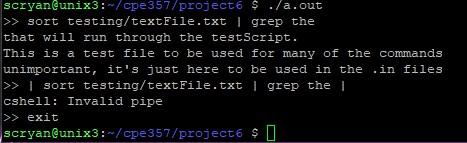

C Shell

A simple shell, written in C, that runs from the terminal command line.

LZW Compression

Implements LZW compression, which builds its dictionary on the fly rather than storing one alongside the output, with a customizable recycling factor.
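This minimal LZW sketch is written in Python rather than the project's C. It covers the core encode and decode loops, including the case where the decoder receives a code it has not built yet. The project's recycling factor is not modeled. Instead, the `max_codes` cap is a hypothetical stand-in that simply stops adding dictionary entries once the table is full.

```python
def lzw_compress(data: bytes, max_codes: int = 4096) -> list:
    """Encode bytes as a list of integer codes."""
    dictionary = {bytes([i]): i for i in range(256)}
    w = b""
    out = []
    for byte in data:
        wc = w + bytes([byte])
        if wc in dictionary:
            w = wc  # keep extending the current match
        else:
            out.append(dictionary[w])
            if len(dictionary) < max_codes:
                dictionary[wc] = len(dictionary)
            w = bytes([byte])
    if w:
        out.append(dictionary[w])
    return out


def lzw_decompress(codes: list, max_codes: int = 4096) -> bytes:
    """Rebuild the dictionary while decoding; no dictionary is transmitted."""
    if not codes:
        return b""
    dictionary = {i: bytes([i]) for i in range(256)}
    w = dictionary[codes[0]]
    out = [w]
    for code in codes[1:]:
        if code in dictionary:
            entry = dictionary[code]
        elif code == len(dictionary):
            entry = w + w[:1]  # code refers to the entry being built right now
        else:
            raise ValueError(f"invalid LZW code {code}")
        out.append(entry)
        if len(dictionary) < max_codes:
            dictionary[len(dictionary)] = w + entry[:1]
        w = entry
    return b"".join(out)
```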

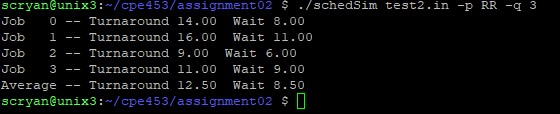

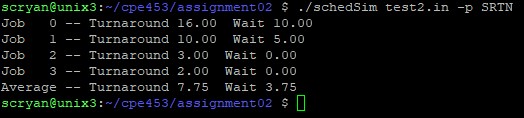

Scheduling Simulator

User Interface for determining turnaround time, response time, and wait time for round robin, first in first out, and shortest remaining job next CPU scheduling algorithms.
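A small example pins down the three metrics the simulator reports. This Python sketch covers only round robin. It assumes that jobs arriving during a time slice join the queue ahead of the job being preempted. That is one common convention, but not necessarily the one the simulator uses.

```python
from collections import deque


def round_robin(jobs, quantum):
    """Simulate round-robin scheduling.

    jobs: list of (name, arrival_time, burst_time).
    Returns {name: (turnaround, response, wait)}.
    """
    jobs = sorted(jobs, key=lambda j: j[1])
    remaining = {name: burst for name, _, burst in jobs}
    first_run, finish = {}, {}
    ready = deque()
    time, i = 0, 0

    def admit(now):
        nonlocal i
        while i < len(jobs) and jobs[i][1] <= now:
            ready.append(jobs[i][0])
            i += 1

    while len(finish) < len(jobs):
        admit(time)
        if not ready:
            time = jobs[i][1]  # CPU idles until the next arrival
            continue
        name = ready.popleft()
        first_run.setdefault(name, time)
        run = min(quantum, remaining[name])
        time += run
        remaining[name] -= run
        admit(time)  # arrivals during the slice queue ahead of the preempted job
        if remaining[name]:
            ready.append(name)
        else:
            finish[name] = time

    return {name: (finish[name] - arrival,         # turnaround
                   first_run[name] - arrival,       # response
                   finish[name] - arrival - burst)  # wait
            for name, arrival, burst in jobs}
```

With jobs A, B, and C all arriving at time 0 with bursts of 5, 3, and 1 and a quantum of 2, the run order is A, B, C, A, B, A. A finishes at 9, B at 8, and C at 5.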

Cal Poly Roborodentia Student Competition

Description

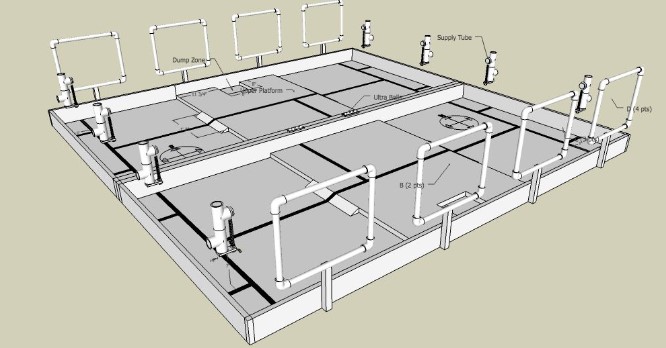

The Cal Poly 2018 Roborodentia Competition was a head-to-head, double-elimination tournament in which the objective was to build an autonomous robot that fires Nerf Rival balls into targets for points. The robots had strict specifications, which are explored in the next section. The robots competed on an 8’ x 8’ course with 4” high walls around the edges and along the center. Black lines made from electrical tape were laid out along the field to facilitate the use of line-tracking sensors. The center of the game field was a raised platform with a 12” wide ramp on either side, which rewarded robots with enough torque and friction to climb it.

Four targets made from PVC pipe and corner joints were located on the back wall of each side of the game field. Scoring with these targets and an additional dump zone is discussed in the Match Details and Scoring section. In addition to the targets, each side of the arena had two supply tubes where the robot could go to refill its supply of balls.

Figure 1. This is a CAD model of the game field showing the PVC targets and supply tubes as well as the black lines and raised platform.

Robot Specifications

In addition to being fully autonomous and self-contained, robots must conform to the following specifications:

1. Robots must have a 12” x 14” footprint or smaller at the start of the match. At any point during the match it cannot exceed a 14” x 17” footprint.

2. The maximum height for a robot at the start of the match is 14”, but there is no restriction after the start of the match.

3. Robots cannot disassemble into multiple parts.

4. Robots cannot damage the course, balls, or targets. They cannot intentionally jam an opponent's sensors or impede the operation of an opponent’s robot.

5. Balls may not be modified or have residue on them after being picked up.

6. Shooting mechanisms must launch balls at less than 50 feet per second.

7. A robot may not be airborne or deflect opponent shots.

If a robot fails to comply with any of these rules, it is disqualified from the tournament.

Match Details and Scoring

A team is assigned to each side of the game field and must start their robots on the tape intersection marked on the ground. Matches last three minutes, but can be ended early if both teams agree. Teams can pick up and restart their robot during the match, but in return an increasingly large bonus is given to the opposing team for each restart. Other than a single press of a button at the start or during a restart, robots must otherwise not be touched or controlled during the match. The robot that scores the most points in the allotted time wins the match.

Each target has a different point value: targets A, B, C, and D are worth 1, 2, 3, and 4 points, respectively. The values are known before the match, giving teams options for maximizing their score. In addition, each full set of balls (one ball scored in each of the 4 targets) earns a 10-point bonus. Teams can also earn half-sets (one ball in each of targets A and B), which are worth 5 points. The dump zone mentioned earlier is a quick, though inefficient, way to score as well: each ball in the dump zone is worth 0.5 points.

Penalties are given for a variety of offenses. If a robot breaks anything, its score is multiplied by 0.5. If a robot attempts to damage an opponent's bot, does not move during the first 20 seconds, or exceeds the size restrictions during a match, it is disqualified. If both bots stop moving for 30 seconds at any time, the match ends.
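The scoring rules and the break penalty above fit in a small function. The rules leave some room for interpretation, so this sketch assumes that half-set bonuses count only the A/B pairs not already used in a full set. Restart bonuses and disqualifications are not modeled.

```python
POINTS = {"A": 1, "B": 2, "C": 3, "D": 4}


def match_score(balls, dump_balls=0, broke_something=False):
    """Score one robot's match.

    balls: {target: number of balls scored in it}.
    Assumption: half-set bonuses only count A/B pairs left over after full sets.
    """
    base = sum(POINTS[t] * balls.get(t, 0) for t in POINTS)
    full_sets = min(balls.get(t, 0) for t in POINTS)
    half_sets = min(balls.get("A", 0), balls.get("B", 0)) - full_sets
    score = base + 10 * full_sets + 5 * half_sets + 0.5 * dump_balls
    return score * 0.5 if broke_something else score
```

Under this reading, four balls spread across A, B, C, and D earn 20 points, while the same four balls all in D earn 16.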

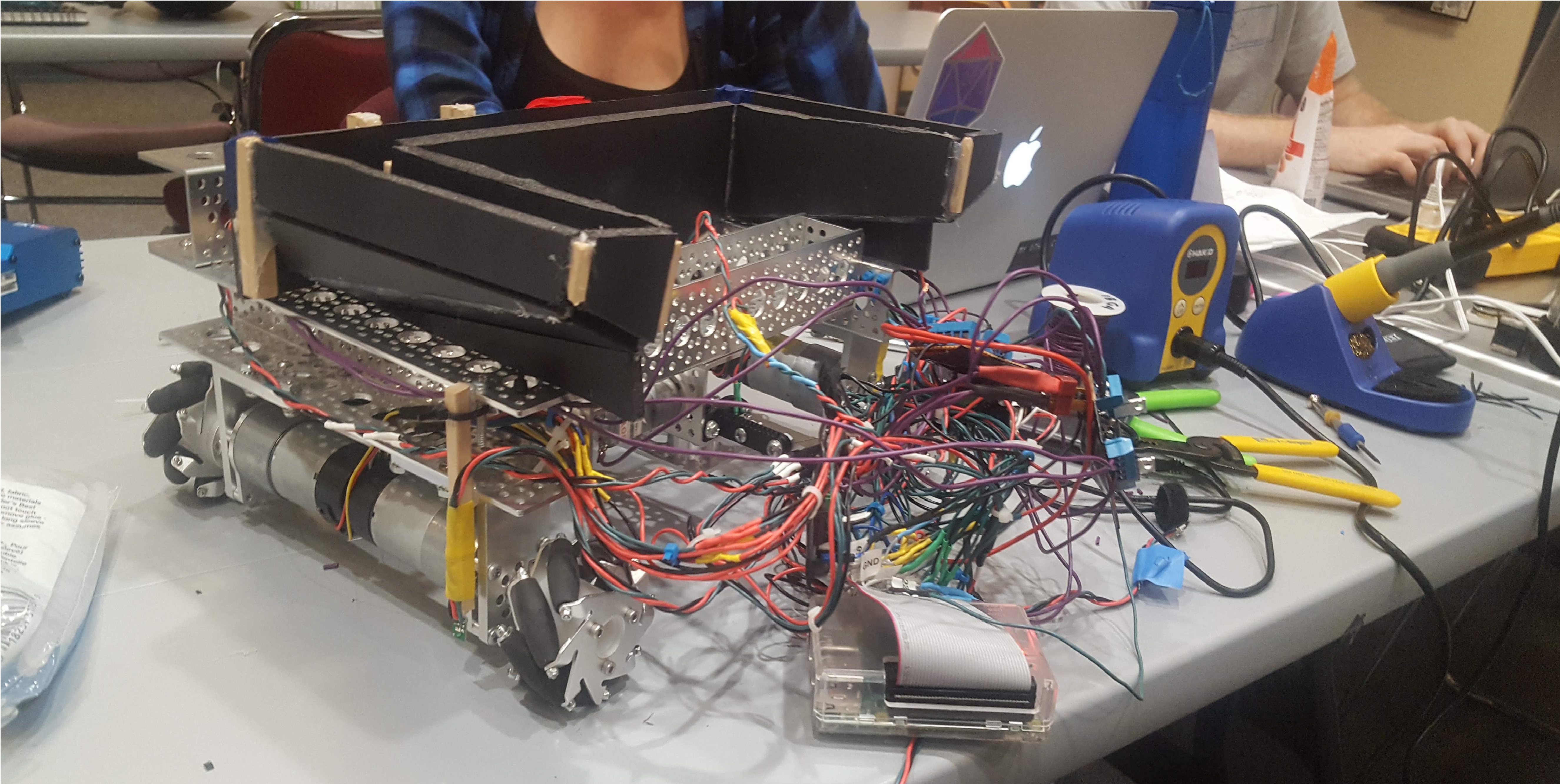

Reflections

We placed in the middle of the bracket for this tournament, finishing 5th. We used a trough made from foam board to funnel balls from the collection tubes into our launching mechanism. We launched the balls much like a pitching machine does: two rubber rollers driven by powerful motors spun in opposite directions to shoot the balls as they were loaded into the launching chamber. Our frame was made from perforated aluminum to balance structural integrity with weight savings. We used 4 motors to drive 4 omnidirectional wheels made from diagonal rolling cylinders. Six line sensors tracked the black lines laid out on the game field, and a PID controller was implemented for the motors.
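Here is one way six line sensors and a PID controller might fit together. A weighted average of the sensor readings gives the line's position relative to the robot's center, and the PID output becomes a steering correction. The robot itself ran C/C++ on a Raspberry Pi, and I was not its primary programmer. This Python version, including the sensor weights and gains, is therefore illustrative only.

```python
class PID:
    """Discrete PID controller with a fixed time step."""

    def __init__(self, kp, ki, kd, dt):
        self.kp, self.ki, self.kd, self.dt = kp, ki, kd, dt
        self.integral = 0.0
        self.prev_error = None

    def update(self, error):
        self.integral += error * self.dt
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / self.dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def line_position(readings):
    """Weighted-average line position under six sensors, from -2.5 (left) to +2.5 (right).

    readings: six non-negative values, larger meaning darker.
    Returns None when no sensor sees the line.
    """
    weights = [-2.5, -1.5, -0.5, 0.5, 1.5, 2.5]  # sensor offsets from center (assumed spacing)
    total = sum(readings)
    if total == 0:
        return None
    return sum(w * r for w, r in zip(weights, readings)) / total
```

Feeding `line_position` into `PID.update` as the error drives the line back toward the center of the sensor array. The `None` case lets the caller fall back to a search behaviour instead of steering on stale data.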

Our strategy revolved around a behavioural loop: shooting all loaded balls from a fixed location, moving to a new location to shoot the next round of balls into the next target, refilling with balls at the supply tubes, and repeating the process for the next two targets. Mathematically, getting full sets is the best way to maximize points, provided you can program that functionality into the bot successfully.

Our robot was controlled with a Raspberry Pi and programmed primarily in C and C++. Unlike in my other projects, I was not the primary programmer; I was involved with the design direction, part selection, and electrical work. I worked on a team with a Computer Engineer, a Software Engineer, and another Mechanical Engineer. This project served as a foundation that furthered my interest in robotics and autonomous systems. I worked on it as a second-year student, before I had taken any Mechatronics or Computer Science coursework.